Random moment of geekiness: DJ walked into my suite talking about the Mel scale, which is a scale of pitches that people perceive as being evenly spaced, even though the underlying frequencies (in Hz) are not. You know how playing a scale on the piano sounds like a linear progression of notes when the frequencies are actually growing geometrically, so that pitch tracks the logarithm of frequency? That.
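
For the curious, here is a rough sketch of that idea in Python. It assumes one commonly used Hz-to-mel formula, `m = 2595 * log10(1 + f / 700)` (there are several variants, and I don't know which one DJ had in mind), plus the standard equal-tempered piano tuning with A4 = 440 Hz on key 49. The function names are my own, made up for illustration.

```python
import math

def hz_to_mel(f_hz):
    """Convert Hz to mels using one common convention (an assumption here)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)

def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def piano_key_hz(n):
    """Frequency of piano key n in equal temperament (key 49 is A4 = 440 Hz)."""
    return 440.0 * 2.0 ** ((n - 49) / 12.0)

# The C keys from C3 to C7 are 12 keys apart, so each one doubles the frequency.
for key in (28, 40, 52, 64, 76):
    f = piano_key_hz(key)
    print(f"key {key:2d}: {f:8.1f} Hz  {hz_to_mel(f):7.1f} mel")
```

Each step up the keyboard multiplies the frequency by the same ratio, while the mel values grow much more slowly than the Hz values do; under this formula 1000 Hz sits at roughly 1000 mel.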

Several minutes later we were comparing notes on our perceptions of the colors of noise (play the .ogg files!). Pink noise was particularly entertaining. It decreases in intensity as the frequency rises, but since humans hear high frequencies "louder" than they hear low ones, we hear it as an even hum, whereas white noise, which weights all frequencies evenly, sounds much more biased towards the high end.
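
A quick back-of-the-envelope check, sketched in Python: if you model white noise as a flat power spectrum and pink noise as a spectrum that falls off as 1/f (the usual textbook definitions, which I'm assuming apply to the files above), you can add up the power in each octave. The `band_power` helper and the choice of octave bands are mine, purely for illustration.

```python
import math

def band_power(exponent, f_lo, f_hi):
    """Integrate an idealized spectrum S(f) = 1 / f**exponent from f_lo to f_hi."""
    if exponent == 1:
        # Integral of 1/f is ln(f), so only the ratio f_hi / f_lo matters.
        return math.log(f_hi / f_lo)
    p = 1 - exponent
    return (f_hi ** p - f_lo ** p) / p

# Octave bands: each one spans a doubling of frequency.
for lo in (125, 250, 500, 1000, 2000, 4000):
    hi = 2 * lo
    white = band_power(0, lo, hi)  # flat spectrum
    pink = band_power(1, lo, hi)   # 1/f spectrum
    print(f"{lo:5d}-{hi:5d} Hz  white: {white:7.1f}  pink: {pink:.3f}")
```

In this idealized model white noise packs twice as much power into each successive octave, while pink noise carries the same power in every octave. That fits with white noise sounding top-heavy and pink noise sounding even, though it is only part of the story, since it says nothing about how sensitive the ear is at each frequency.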

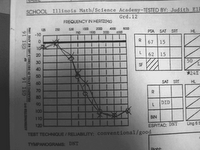

Ironically, my hearing profile acts sort of like an averaging filter; I can't hear high sounds nearly as well as I hear low ones. This means I actually perceive the volumes of sounds (the ones I can hear) closer to their "relative true volumes." That's kind of cool. And it's beginning to become academically relevant since DJ and I have been looking into speech recognition engines and algorithms for SCOPE and research, respectively. How can you process audio to have the smallest files possible while still retaining the ability for humans or computers to understand the speech contained within them? I think the solutions might be able to tell me something about how I'm able to hear people talk (I'm apparently not supposed to be able to understand speech as well as I do).

On a semirelated note, I was shocked to find out this week that product design for accessibility for the deaf is pretty much nonexistent. I'm not talking about hearing aids and TDDs; those are product designs for the deaf. I mean making things that are used by everyone more accessible to deaf people - the equivalents of wheelchair ramps for buildings. I was fortunate enough to be an early and avid reader, so my difficulty in navigating the world is minimal; if it's captioned or has words on it, I'm fine.

Turns out this isn't the case for most. Half of deaf and hard-of-hearing 17- and 18-year-olds read at or below a fourth-grade level. (The grammar and vocabulary set of sign language is very different from that of written/spoken colloquial American English, hence the problem.) Picture a deaf teenager who wants to go to college. They can't hear lectures, can't hear their classmates discussing the material in the hallways, and can't read their textbooks. How are they supposed to access and understand higher-level academic information?

There is a misconception that "if it's captioned, it's accessible to deaf people." I am not sure how widespread - or how true - that statement really is, but I want to find out. One of the user interface design mailing lists I subscribe to mentions Sesame Street as a particularly egregious example. Sesame Street teaches little kids to read. And to expect a deaf toddler to read closed captions in order to understand a television show that's trying to teach her how to read... well, you see a slight problem here.

I am really, really lucky. I was born hearing, so I learned that there was speech and that people communicated with it, and that this speech corresponded to those funny marks on paper. So when I lost my hearing, I was somehow able to make the mental jump to communicating almost entirely through those funny marks on paper as a way to get the equivalent information that the other kids got through speech. I learned to read fast at a very young age, and have now made it through 15 years of school on the strength of my ability to blaze through written texts. If it weren't for my reading abilities, I can almost guarantee you that I would have been labeled as a kid with academic difficulties, not a "gifted" one. If you've ever been in a class with me where I didn't have a good textbook, you know it's true. In fact, those classes correspond very, very closely to the almost-failing grades in my transcripts (and trust me, I've got some).

There's a weird sense of responsibility: since I'm an engineer and interested in product design, I ought to see what I can do for the deaf kids who, for whatever reason, have a hard time navigating the hearing world as adroitly as I've learned how to. Sort of like a poor kid from a third world country who somehow manages to get educated and become successful; you feel an obligation to do something for the folks that got "left behind."

But I also have a lot to learn about the deaf community and culture before I can do much about it. "Engineer's arrogance" is a very dangerous thing. And in a sense, I've been "left behind" out of the deaf community as much as they've been "left behind" in the hearing one, so it'll be very difficult for me to design things that will help them within their world, within ours, or to bridge the two (and do they even want the two latter ones? I don't know).

How can I get started? Would anybody else like to help?

Addendum of interesting links: a recounting of his university experience by a deaf engineer who works in product design, an article on web accessibility for the deaf by Joe Dolson, and another one which has two great little QuickTime videos that illustrate my frustrations with closed captioning (I sometimes follow movies in the lounge by reading along with the film transcripts as we watch).